How to Do the “Retrieval” in Retrieval-Augmented Generation (RAG)

The project is available online on Towards AI.

Efficient and accurate text retrieval is a cornerstone of modern information systems, powering applications like search engines, chatbots, and knowledge bases.

It is the first step in RAG (Retrieval-Augmented Generation) systems.

RAG systems, first use text retrieval to find the answer to our query and then use an LLM to answer. RAG allows us to “chat with our data”.

In this article, we explore the integration of dense retrieval, BM25 lexical search, and transformer-based reranking to create a robust and scalable text retrieval system.

The project leverages the strengths of each technique:

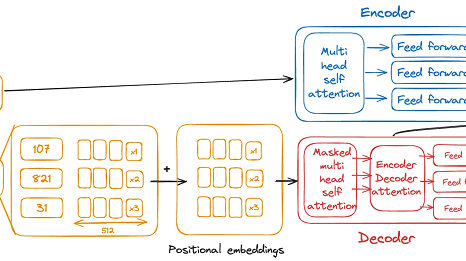

Dense Retrieval: Captures semantic meaning by embedding text into high-dimensional vector spaces, enabling similarity-based search. BM25 Lexical Search: Performs efficient keyword matching to quickly narrow down relevant results. Transformer-Based Reranking: Uses Hugging Face cross-encoders to evaluate and rank query-document pairs based on semantic relevance, ensuring precision in the final output. This hybrid approach optimizes both computational efficiency and retrieval accuracy, making it well-suited for use cases where context, relevance, and speed are critical.

Continue your read on Towards AI.

Leave a Comment