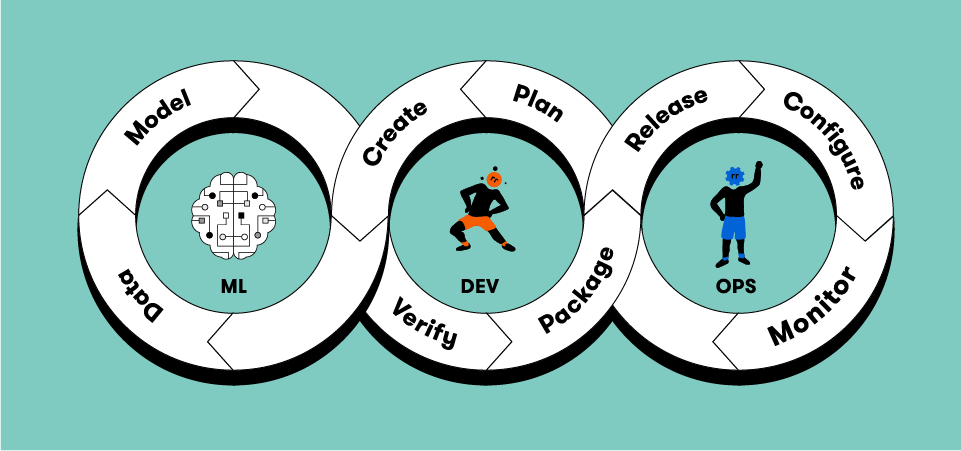

MLOps Lifecycle with MLFlow, Airflow, Amazon S3, PostgreSQL

The project is available on my GitHub

MLOps Pipeline with MLflow, Airflow, Amazon S3, and PostgreSQL

A complete Machine Learning lifecycle. The pipeline is as follows:

1. Read Data ➙ 2. Split train-test ➙ 3. Preprocess Data ➙ 4. Train Model

➙ 5.1 Register Model

➙ 5.2 Update Registered Model
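The step order above can be written down as a dependency graph, which is roughly the shape an orchestrator such as Airflow works with. The following is a minimal standard-library sketch, not the project's actual DAG: the task identifiers are hypothetical names derived from the step labels, and because the text does not say whether 5.1 and 5.2 are alternatives, the sketch only records that both come after training.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each task maps to the set of tasks it depends on.
# Task names are hypothetical; they mirror the step labels in the README.
PIPELINE = {
    "read_data": set(),
    "split_train_test": {"read_data"},
    "preprocess_data": {"split_train_test"},
    "train_model": {"preprocess_data"},
    # Both final steps depend on training; which one runs is not modelled here.
    "register_model": {"train_model"},
    "update_registered_model": {"train_model"},
}


def execution_order(graph):
    """Return one valid order in which the tasks could run."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    for position, task in enumerate(execution_order(PIPELINE), start=1):
        print(position, task)
```

The first four tasks always come out in the same order because they form a single chain; the relative order of the two final tasks is not significant.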

The pipeline uses the Telco Customer Churn dataset from Kaggle.

Tech Stack

- MLflow: For experiment tracking and model registration
- PostgreSQL: Store the MLflow tracking
- Amazon S3: Store the registered MLflow models and artifacts
- Airflow: Orchestrate the MLOps pipeline
- Machine Learning
- Jupyter Lab: R&D

How to reproduce

- Have Docker installed and running.

Make sure docker-compose is installed:

pip install docker-compose

- Clone the repository to your machine.

git clone https://github.com/Deffro/MLOps.git

- Rename `.env_sample` to `.env` and change the following variables:
  - AWS_ACCESS_KEY_ID
  - AWS_SECRET_ACCESS_KEY
  - AWS_REGION
  - AWS_BUCKET_NAME

- Run the docker-compose file

docker-compose up --build -d
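Before starting the containers, it can help to confirm that `.env` defines the four AWS variables listed above. The sketch below is not part of the repository; it assumes the file uses plain `KEY=VALUE` lines, and the helper names `parse_env` and `missing_vars` are made up for illustration.

```python
from pathlib import Path

# The four variables the README asks you to change.
REQUIRED = (
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_REGION",
    "AWS_BUCKET_NAME",
)


def parse_env(text):
    """Parse KEY=VALUE lines, skipping blanks and comments (assumed format)."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_vars(text, required=REQUIRED):
    """Return the required names that are absent or empty."""
    values = parse_env(text)
    return [name for name in required if not values.get(name)]


if __name__ == "__main__":
    env_path = Path(".env")
    if not env_path.exists():
        print(".env not found; rename .env_sample to .env first")
    else:
        missing = missing_vars(env_path.read_text())
        print("Missing or empty:", ", ".join(missing) if missing else "none")
```

This only catches absent or empty entries. It cannot tell whether a placeholder value from `.env_sample` was left unchanged or whether the credentials are valid.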

URLs to access

- http://localhost:8080 for Airflow. Use credentials: airflow/airflow
- http://localhost:5000 for MLflow.
- http://localhost:8893 for Jupyter Lab. Use token: mlops
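Once the containers are up, a quick way to see whether the three services respond is to request each URL. This is a small standard-library sketch rather than anything shipped with the project; the function name `is_up` is hypothetical, and a service that answers with an HTTP error status (for example a login redirect or 401) is still counted as reachable.

```python
import http.client
import urllib.error
import urllib.request

# URLs taken from the list above.
SERVICES = {
    "Airflow": "http://localhost:8080",
    "MLflow": "http://localhost:5000",
    "Jupyter Lab": "http://localhost:8893",
}


def is_up(url, timeout=2.0):
    """Return True if something answers HTTP at the given URL."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied, just not with a 2xx status.
        return True
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, or a malformed response.
        return False


if __name__ == "__main__":
    for name, url in SERVICES.items():
        status = "reachable" if is_up(url) else "not reachable"
        print(f"{name} ({url}): {status}")
```

Containers may need some time after `docker-compose up` before they accept connections, so a "not reachable" result right after startup is not necessarily a failure.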

Cleanup

Run the following to stop the project's containers through Docker Compose:

docker-compose stop

Or run the following to stop and delete all Docker containers on the machine, not only those of this project:

docker stop $(docker ps -q)

docker rm $(docker ps -aq)

Finally, run the following to delete all (named) volumes

docker volume rm $(docker volume ls -q)
